AI Cybersecurity

AI and machine learning technologies are rapidly being adopted by governments, businesses and individuals seeking to benefit from their transformative potential. However, as these systems become more pervasive, they are also emerging as targets for malicious actors. To fully realise the advantages of AI, it is essential for government, industry and academia to work together to secure these systems and protect society from AI-related threats. In support of this vision, the GCSCC is delivering an AI cybersecurity research programme aimed at investigating AI security risks while advancing economic prosperity.

Our Holistic Approach to AI Cybersecurity Resilience

A holistic cybersecurity approach is required to unlock the full potential of AI and improve AI cybersecurity resilience. AI systems do not exist in isolation, and therefore their security must be managed within their wider technical, economic and political context.

We are analysing AI cybersecurity challenges using a multifaceted approach that gives us a more comprehensive understanding of the key enablers, dependencies and dynamics shaping AI cybersecurity in a country.

Scales of AI Impact on Cybersecurity

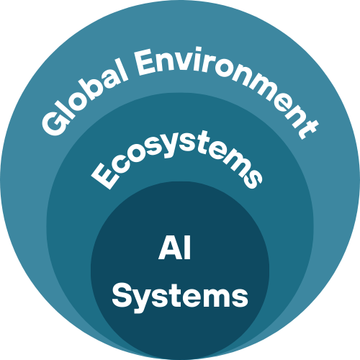

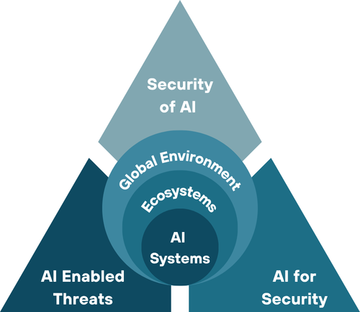

AI introduces new challenges to cybersecurity resilience at three interconnected scales, which form the basis of our research and impact: within AI systems themselves, across the wider AI ecosystem, and in the broader global environment.

- AI Systems: the cybersecurity challenges within the technical infrastructure and processes of AI.

- Ecosystems: the wider consequences and harms of AI on cybersecurity within and between entities, sectors and economies, including those which may extend beyond national boundaries.

- Global Environment: the global AI cybersecurity landscape of states and other transnational actors and the associated security factors.

Across these scales, AI in cybersecurity can be considered from three angles: the risks posed by growing AI adoption (Security of AI); the defensive opportunities provided by AI to mitigate cybersecurity risks (AI for Security); and the impacts of developments in AI on the capabilities of threat actors (AI Enabled Threats).

- Security of AI: the risks to the cyber-resilience of systems which utilise AI (e.g. data poisoning, system compromise).

- AI for Security: the use of AI to strengthen cybersecurity through technical and non-technical capabilities (e.g. enhanced detection, prevention and response capacities; intelligence sharing; awareness and education programmes).

- AI Enabled Threats: the improved capabilities AI may provide threat actors that enhance the speed, scale and sophistication of cyber-attacks (e.g. AI-assisted hacking, deepfake-based fraud, automated social engineering).

Our Research Streams

Our research programme investigates risks and opportunities connected to the three scales and three angles of AI cybersecurity. The outputs will deliver new capability options and insights for policy makers and cybersecurity practitioners, and will contribute to academic research on the topic.

AI has various implications for domestic and global cyber resilience and requires new or updated cybersecurity capacities at the national and international levels. As this is a global issue, it is essential for leading stakeholders to understand what these implications and capacities are, how they interrelate across and within national borders, and what actions stakeholders can take to build and maintain robust AI cybersecurity resilience in an interconnected world.

This research area is investigating national and international components of AI cybersecurity to identify the multifaceted risks and solutions. As part of this work, we have drafted a novel National AI Cybersecurity Readiness Metric, a diagnostic tool that will enable nations to understand the current state of their AI cybersecurity capabilities and to identify priorities for capacity enhancement and investment across all dimensions of national cybersecurity capacity. The Metric will also help to raise awareness of AI cybersecurity risks amongst countries and contribute to a greater global understanding of the topic.

Affiliated Projects

This research focuses on investigating the consequences of AI security incidents beyond the immediate technical effects on accessed digital assets. Through it we aim to understand the propagation of incident harms and illuminate potential risks to individual organisations, industry supply chains, delivery pipelines and the AI ecosystem more broadly. It seeks to provide a critical lens to enable the exploration of impacts and mitigation measures by AI users and policy makers seeking to protect national infrastructure, supply chains and the overall economy.

Affiliated Projects

AI and Cyber: Balancing Risks and Rewards

The cybersecurity community lacks a comprehensive understanding of the nature of AI system vulnerabilities. This knowledge gap limits both the application of existing cybersecurity approaches to AI systems and the development of new methods. Our research explores the nature of these vulnerabilities and their potential to be exploited, with the aim of guiding practice and policy on the appropriate use of AI systems. It investigates various AI model types to understand how different model architectures vary in their susceptibility to different types of cyberattack, and to identify correlations between the weaknesses, risks and severity of AI system compromises.

Project Partners

The National AI Cybersecurity Readiness Metric is part of a programme of ongoing work the GCSCC is undertaking with the UK Government.